You might assume artificial intelligence (AI) can easily detect other AI-generated content, but it’s not that simple.

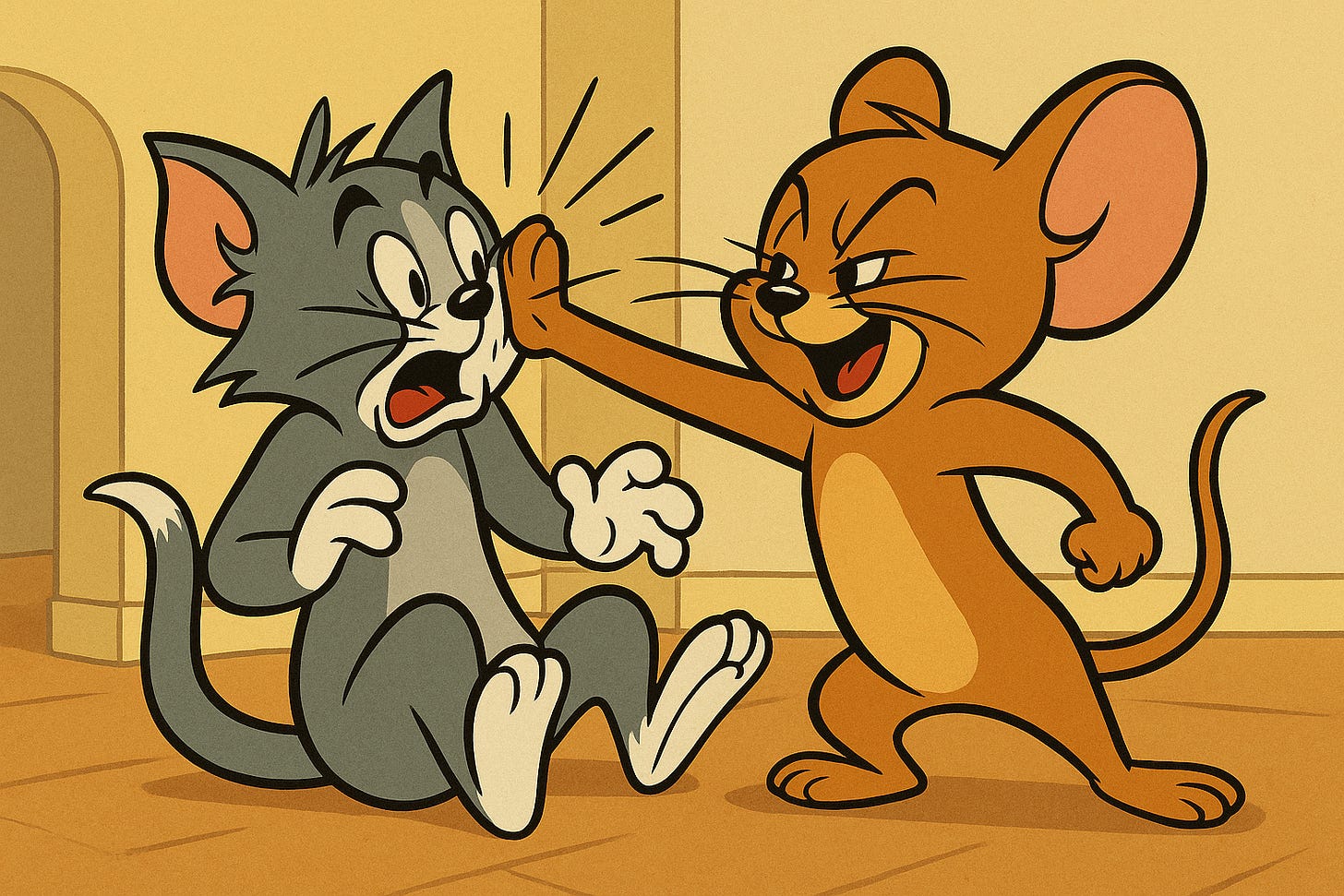

Here’s why detection remains a cat-and-mouse game in which the mouse is still winning:

Generative AI keeps evolving, quickly outpacing detection tools. As soon as a detector learns certain “tells,” new models adapt and erase them. There’s also no universal “AI fingerprint”—each model leaves behind different traces, making a one-size-fits-all solution impossible.

Things get trickier when humans tweak AI-generated content, masking any remaining clues. And because both generation and detection models are often black boxes, it’s hard to know why detection fails or how to improve it.

Listen to this podcast* to learn why AI detection tools fall short on fully AI-generated content and also struggle with edited or partially AI-enhanced material, which complicates efforts to catch plagiarism and prevent misinformation, especially in academia, journalism, and law.

(*Podcast generated by Google NotebookLM)

Subscribe to “Ditch The Scroll”, a newsletter about digital minimalism.
